What are you waiting for? You need to learn the basics of the search engine so that you can start ranking higher on search engine results pages.

What is the anatomy of a search engine? Well, it's complicated. In this article, we're going to cover some of the most important basic components that make up an algorithm for getting ranked in search engines.

If you want to understand, step by step, how search engines work, and get your website ranking higher and pulling in loads of traffic, you'll need a good grasp of the basics. You'll learn how search engines work from a beginner's point of view, and you'll understand how they discover your site, make it accessible, and rank it.

Let's dive in!

Before boring you with all the technical stuff, let's first understand what a web search engine is, why it is important, and what its purpose is.

A web search engine is a software system designed to return a list of relevant web pages in response to a search query.

There are three main features of a web search engine:

Popular search engines include Google, Bing, Yahoo, Yandex, and Baidu, but Google dominates the industry with over 85% market share.

A search engine is a gateway to just about everything we do online. If you are a business, there are some very real and specific benefits to having a consistent, ongoing search engine optimization strategy. For the first time in the history of marketing, users are offering up their actual intent through the words that they type and speak into search engines. So a good understanding of how web search engines crawl, index, and rank your website will give you an edge over your competitors.

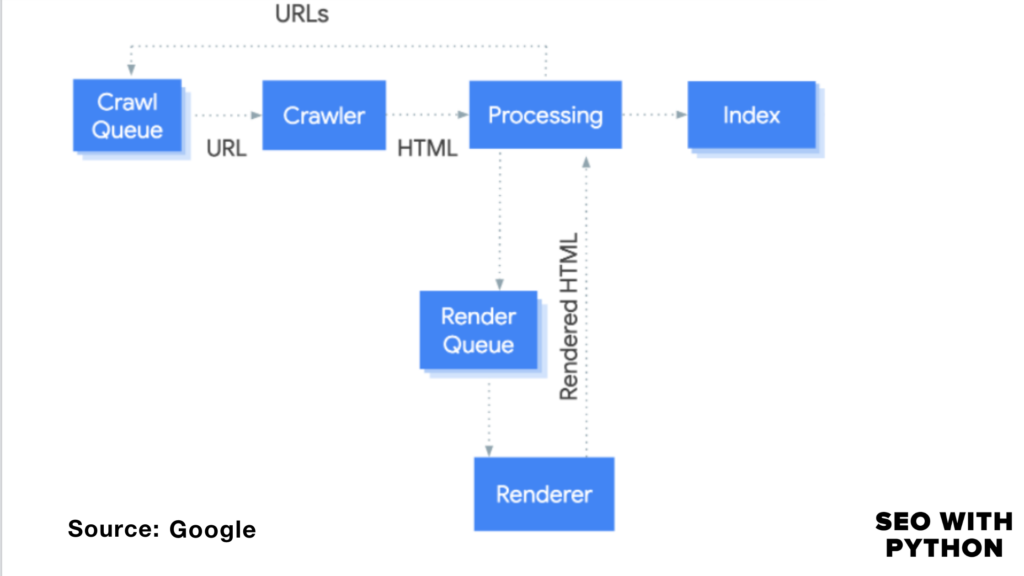

The role of a search engine on the web cannot be overstated. It discovers new, old, and updated content on the internet (crawling), processes the accessed pages for easy use (rendering), and then stores information about the pages it has discovered and rendered in a database called the index.

Its ultimate goal is to make the web search experience better by providing high-quality, comprehensive, insightful, authoritative, and relevant responses to users' information requests.

All of this is independent of ranking.

Crawling is the process search engines use to find web pages: bots follow links from page to page and retrieve information to add to the index. The objective of crawling is to gather, quickly and efficiently, as many useful and accessible web pages as possible, together with the link structure that interconnects them.
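To make the link-following idea concrete, here is a minimal sketch of a breadth-first crawl. It assumes a tiny in-memory "web" (the `PAGES` dictionary and its URLs are made up for illustration) instead of real HTTP requests, and it is not how any particular search engine's bot is implemented.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical mini "web": URL -> HTML. A real bot would fetch pages over HTTP.
PAGES = {
    "/home": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "/about": '<a href="/home">Home</a>',
    "/blog": '<a href="/post-1">Post 1</a> <a href="/home">Home</a>',
    "/post-1": "<p>No links here.</p>",
    "/orphan": "<p>Nothing links to this page.</p>",
}


def crawl(seed):
    """Breadth-first crawl from a known URL; returns URLs in discovery order."""
    discovered = [seed]
    seen = {seed}
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(PAGES.get(url, ""))
        for link in parser.links:
            # Only follow links to pages that exist and were not seen before.
            if link in PAGES and link not in seen:
                seen.add(link)
                discovered.append(link)
                queue.append(link)
    return discovered


print(crawl("/home"))
```

Notice that `/orphan` never shows up in the result: no page links to it, so a crawler that relies only on following links has no way to discover it.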

Rendering is the process of turning hypertext into pixels. The code of a web page is turned into the usable, visual page that a browser displays and lets you interact with.

Indexing is the act of adding pages, and information about them, to an index. The search index is a database of well-structured data from which the search engine retrieves information relevant to a user's query. If a page is indexed, it can be served in response to a searcher's query. If a page isn't in a search engine's index, it can't rank.
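One common way to picture a search index is as an inverted index: a mapping from each word to the pages that contain it. The sketch below is a simplified illustration under that assumption; the `DOCUMENTS` data and the function names are hypothetical, and real search indexes store far more than words.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> visible text.
DOCUMENTS = {
    "/crawling": "Bots crawl the web by following links",
    "/indexing": "The index stores pages so they can be served",
    "/ranking": "Ranking orders indexed pages for a query",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in documents.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query, sorted by URL."""
    words = tokenize(query)
    if not words:
        return []
    matches = set.intersection(*(index.get(word, set()) for word in words))
    return sorted(matches)


index = build_index(DOCUMENTS)
print(search(index, "pages"))          # pages containing "pages"
print(search(index, "indexed pages"))  # pages containing both words
print(search(index, "sitemap"))        # a word that no page contains
```

The last query returns an empty list, which mirrors the point above: a page (or word) that never made it into the index cannot be served for any query.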

Ranking is a site's position on the search engine results page (SERP). The SERP is the list of results that the search engine returns and that, in theory, is relevant to the searcher's query. Many ranking factors determine how relevant content is to a given query. For your site to appear at the top of the SERP, the quality of the content on your page, the user experience, the quality and quantity of links pointing to your page, and many more factors are checked to decide whether your page merits the position.
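As a rough illustration of how several signals can be combined into one position, here is a toy scoring sketch. The two signals used (query-term matches and inbound link count), the `link_weight` value, and the sample pages are all assumptions made for this example; actual search engines weigh many more factors, and their formulas are not public.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str
    inbound_links: int  # how many other pages link here (illustrative signal)


def term_matches(page, query):
    """Count how often the query's words appear in the page text."""
    words = page.text.lower().split()
    return sum(words.count(term) for term in query.lower().split())


def score(page, query, link_weight=0.5):
    """Toy score: term matches plus a weighted link count; 0 if irrelevant."""
    matches = term_matches(page, query)
    if matches == 0:
        return 0.0  # irrelevant pages never rank, however many links they have
    return matches + link_weight * page.inbound_links


def rank(pages, query):
    """Return the URLs of relevant pages, highest toy score first."""
    scored = [(score(page, query), page) for page in pages]
    relevant = [(s, page) for s, page in scored if s > 0]
    relevant.sort(key=lambda item: item[0], reverse=True)
    return [page.url for _, page in relevant]


pages = [
    Page("/a", "search engine basics for beginners", inbound_links=1),
    Page("/b", "how a search engine ranks pages in search results", inbound_links=4),
    Page("/c", "cooking recipes", inbound_links=10),
]
print(rank(pages, "search engine"))
```

In this sketch `/c` has the most links but is dropped because it does not match the query at all, while `/b` outranks `/a` because it has both more matches and more links.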

Search engines do not search the entire web. Portions of it, referred to as the hidden web, cannot be crawled or discovered. Content that falls into this category includes:

In short, search engines don't crawl all the pages on the web and don't index all the pages they crawl. To check if a page is indexed is to

Search engine bots are constantly crawling the web, but for your page to be crawled, it must first be discovered. Below are the basic mechanics of how search engines crawl the web.

There are three common ways search engines discover URLs to crawl.

Bots (also commonly called crawlers, web crawlers, spiders, spider bots, search engine bots, or search engine spiders) are search engine software that roams the web looking for discoverable website content to render and then index.

For your new website to rank on search engines or appear on the search engine results pages (SERPs), its pages must be indexed. Indexing depends on crawling and rendering. Search engine bots are constantly crawling the web. They start at a known URL and crawl to other pages. Then, all being well, they render and index what they find.

Google uses three algorithms for ranking websites:

An algorithm is a set of rules that a computer uses to automatically perform a specific task. In the context of SEO, the algorithm is what determines which websites Google ranks highest in search results.

Algorithms are essential because, without them, the search engine would not know how to rank millions of different websites. Google cannot simply test and rank everything manually.

But what's the big deal? Why does it matter that Google ranks websites based on algorithms? The big deal is that everyone's results are going to look different on search engines. Some websites are going to rank well, others are going to rank poorly. And with hundreds of billions of web pages out there, that's the kind of thing a lot of people would want to see sorted.

This article has covered the basics of how search engines work. Understanding these basic mechanics is the first step toward ranking your pages in Google's SERP, which in turn gives you more visibility and more organic traffic for your website.

Search engines are built on complex algorithms, and each engine uses its own to determine search results. Search engines crawl the web and store the pages they index on their servers. They crawl by collecting and analyzing information about web pages, including metadata, keywords, links, and images.

Once a search engine has collected enough information about a web page, it processes that information using various algorithms, ranking web pages based on relevance, freshness, and other factors. Once a page is ranked, it can be displayed in search results.

Search engines allow users to search by text, image, video, or a combination of these. So if search engine bots cannot access your pages to index them, your site's visibility in search results will suffer.

If you've got questions, please let me know in the comments or reach out to me on Twitter.
